Do queues in the ladies’ toilets signify success at an IT conference? Is a half-hour sit-down worth more than a T-shirt? These and other deep questions pondered.

Capgemini’s Cloud Development team are returning from our annual pilgrimage to Devoxx UK, the best and biggest annual IT developer conference in the UK. As is becoming usual, a train strike limited my attendance on the Wednesday, but even in a couple of days there has been much to inspire and mull over. This year, instead of a full booth, Capgemini sponsored a corner filled with super-comfy bean bags, and it was the most popular our area has ever been! As always it felt like a very inclusive conference, with a wide range of age groups and nationalities, and a good balance of genders and races present.

AI Again

As with last year there was a strong theme of AI across many of the conference talks, but this year the emphasis was more on developer involvement - less “Wow look what this can do”, and more “This is how you can build things”. Some angles were difficult to see a production use-case for. For example, “Developing Intelligent Apps with Semantic Kernel” showcased how, with the Semantic Kernel Java API, you can give generative AI access to call your Java functions whenever it considers them appropriate. Having recently taken Capgemini’s “Responsible AI” training course, I appreciate that generative AI’s decision-making process cannot be considered “correct”, so you could only use this approach when it didn’t matter if the AI got the decision to make the Java call wrong. This really limits usage. Deciding when to delete development resources, perhaps? Or rotating a “thought for the day” message? Nothing more important, please! The speaker’s (inevitable) example of roleplaying games seemed safe enough.

There were more AI concerns in Harel Avissar’s talk “The State of Malicious ML Models Attacks”. There are now popular repositories such as Hugging Face where people can share their pre-trained large language models (LLMs), which once trained are, as Harel pointed out, basically deployable units the same as any other software artifact. As such, they are open to attack in the same way as other pipeline artifacts and need to be signed and secured in the same way. This is quite a new field for attacks, but when scanning the models available to download, Harel’s team did find some examples where pickle (.pkl) files, which can run Python code when they are loaded, contained malicious “reverse shell attack” code. Something to be aware of.
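To make the pickle point concrete, here is a minimal sketch of why loading an untrusted pickle file is risky. It is not taken from the talk: the `Payload` and `DenyAllUnpickler` names are hypothetical, and the "attack" is a harmless call to `len` standing in for whatever a real attacker would run. The second half follows the "restricting globals" approach described in the Python `pickle` documentation.

```python
import io
import pickle


class Payload:
    """Hypothetical stand-in for a tampered model file."""

    def __reduce__(self):
        # pickle stores "call len('abc')" and replays that call on load.
        # A real attack would name a far less friendly callable here.
        return (len, ("abc",))


class DenyAllUnpickler(pickle.Unpickler):
    """Refuses every global lookup, so no callable can be resolved on load."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def guarded_loads(data: bytes):
    """Load pickle bytes through the deny-all unpickler."""
    return DenyAllUnpickler(io.BytesIO(data)).load()


blob = pickle.dumps(Payload())

# Plain loading runs the stored call: we get len's result, not a Payload.
result = pickle.loads(blob)

# The guarded loader stops as soon as the pickle asks for a callable.
try:
    guarded_loads(blob)
    blocked = False
except pickle.UnpicklingError:
    blocked = True
```

A deny-all loader is only useful for plain data. The wider point from the talk stands either way: treat a downloaded model like any other artifact and check where it came from before opening it.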

AI and Vectors

Quite a few talks focussed on vector databases, commonly used with LLMs, and the algorithms needed to search them. Vector types can represent the relationships between concepts across multiple dimensions. Mary Grygleski’s talk on Friday covered how ChatGPT’s vectors have 1,536 dimensions. This would mean, for example, that the word “cat” can be positioned along 1,536 different axes of meaning. Handy. But not brand new, and not specific to generative AI - Elasticsearch, for example, has been using a vector database and the HNSW search algorithm to great effect in its fuzzy searches for years. In fact, as I discovered in my last blog post, the powers of fuzzy search and natural language processing are often more real-world useful than generative AI. You don’t usually want a computer system to come up with its own answer to a question, you want it to go away and find a definitive answer!
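As a rough illustration of what "searching vectors" means, here is a minimal sketch using cosine similarity over a brute-force scan. The three-dimensional embeddings and the word list are made up for the example (real embeddings have hundreds or thousands of dimensions), and an algorithm such as HNSW exists precisely to avoid comparing the query against every stored vector as this sketch does.

```python
import math


def cosine_similarity(a, b):
    """Similarity of direction between two vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, index):
    """Brute-force search: compare the query with every stored vector."""
    return max(index, key=lambda word: cosine_similarity(query, index[word]))


# Hypothetical 3-dimensional embeddings, invented for this example.
INDEX = {
    "kitten": [0.9, 0.8, 0.1],
    "banana": [0.2, 0.9, 0.3],
    "lorry": [0.1, 0.2, 0.95],
}

cat = [0.95, 0.75, 0.05]
best = nearest(cat, INDEX)
print(best)
```

With these invented numbers the closest stored word to "cat" is "kitten", because their vectors point in almost the same direction.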

Thoughtful Architecture

Conference regular Andrew Harmel-Law was back with what some have said is his best talk yet - “How we Decide”. I didn’t attend as I had recently seen Dave Snowden’s talk on “being human in an age of technology”, in which he went into detail on the biological human process of decision-making - for example, did you know that groups of 5 or fewer will always reach consensus? Possibly because this is a common family group size, allowing ancient humans to move quickly in family units. And did you know that only 4 in 5 of your decisions are made by the conscious brain, with the other one coming pretty much straight from the body’s senses? Things like pheromones have a big impact on decision-making when face to face. I was still processing this information so skipped Andrew’s talk for a deep dive into container-based IDEs, but I feel I missed out and am looking forward to the video!

I did attend Barry O’Reilly’s joyous “An Introduction to Residuality Theory”. Any talk that begins with a Douglas Adams quote and goes on to arm the attending developers with some REALLY DIFFICULT questions to put to enterprise architects (especially difficult if you don’t allow them to use the word “magic”) is always going to go down well, and O’Reilly went on to confirm my suspicions that the way we approach software architecture is less science and more art. He pointed out that our architecture diagrams don’t capture time, change or uncertainty - hugely important factors in software engineering - and that good software architects must be really comfortable with the concept of uncertainty. He had lots of great quotes about how Agile is a reaction to the realisation that requirements don’t work for complex systems, but that using Agile and reacting to change when problems arise leads to flaky architectures. His revelation, and the topic of his PhD thesis, is that software architecture could be a science, if we use methods from complexity theory.
He went on to outline some really excellent ideas about how to robustify your candidate architecture using random simulation and ideas based on Kauffman’s networks and attractors. Plus, what to do if fire-breathing lizards should happen to climb out of the Thames. Forewarned is forearmed!

More microservices

Microservices haven’t gone away from Devoxx, and this year there were a couple of talks debating / re-debating the monolith vs microservice argument. I especially enjoyed Chris Simon’s “Modular Monoliths & Microservices - A 4+1 View Continuum” in which he advised us all not to bother with the debate as both terms were becoming meaningless! Even a monolith is probably a distributed system. And if you view your client browsers as a scaled-out cluster of your application front end, which you probably should, even the simplest static website becomes a vast distributed network. He recommended moving away from the terms microservice / monolith and instead directly using terms such as “process” (a thing you can start and stop), “node” for distributed compute, and teams and repositories for code ownership. He talked about the power of Domain Driven Design (DDD) when building up the logical view of your system, and how mapping processes onto bounded contexts can give you a candidate physical architecture, although don’t shy away from multiple processes in a bounded context if, for example, there are asynchronous steps between multiple processes (e.g. a queue). He also gave the quote of the conference when he said people often found they had a service and “distributed the wazoo out of it and we don’t know why”. He talked about the development view of an architecture and how your code repository should always align with the logical view. If this doesn’t happen you end up with inter-team coupling - if you need mechanisms such as a “Scrum of Scrums” and it is becoming very hard to get decisions made, that is a clue that your development view isn’t in alignment. 
He also talked about how we should reconsider our assumptions on the physical view of the architecture - we often jump to the conclusion that a microservice needs its own infrastructure but this may not be the case, and we often assume that a repository must be deployed as a monolith when in fact you can use path filters on your monorepo to deploy separate sections at different times.
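The path-filter idea can be sketched in a few lines. This is an illustration only, not Chris’s tooling or any particular CI product’s syntax: the monorepo layout, the unit names and the `units_to_deploy` helper are all hypothetical. The idea is simply that each deployable unit owns some path patterns, and a change set only triggers the units whose paths it touches.

```python
from fnmatch import fnmatchcase

# Hypothetical monorepo layout: each deployable unit owns some path patterns.
# Note that fnmatch's "*" also matches "/" so these cover nested folders.
PATH_FILTERS = {
    "orders-service": ["services/orders/*"],
    "billing-service": ["services/billing/*"],
    "web-frontend": ["web/*"],
}


def units_to_deploy(changed_files):
    """Return the units whose path filters match at least one changed file."""
    units = set()
    for path in changed_files:
        for unit, patterns in PATH_FILTERS.items():
            if any(fnmatchcase(path, pattern) for pattern in patterns):
                units.add(unit)
    return units


changed = ["services/orders/src/api.py", "README.md"]
print(sorted(units_to_deploy(changed)))
```

Here only the orders service would be redeployed, even though everything lives in one repository.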

Fear of Rust

For something new, I went to see Ayoub Alouane talk about “My discovery of Rust: Why is it a Game Changer?” where he showed an example of Discord’s “Message Marker” app showing regular spikes in CPU that corresponded with garbage collection. The corresponding Rust application had no such spikes. Why? Rust, announces Ayoub, does its memory management at compile time! He went on to demonstrate with a simple loop application how, for each variable, you had to specify the memory details of the variable:

- Is it mutable?

- Is it usable in multiple threads?

- Is it lockable?

- Should you clone it into threads?

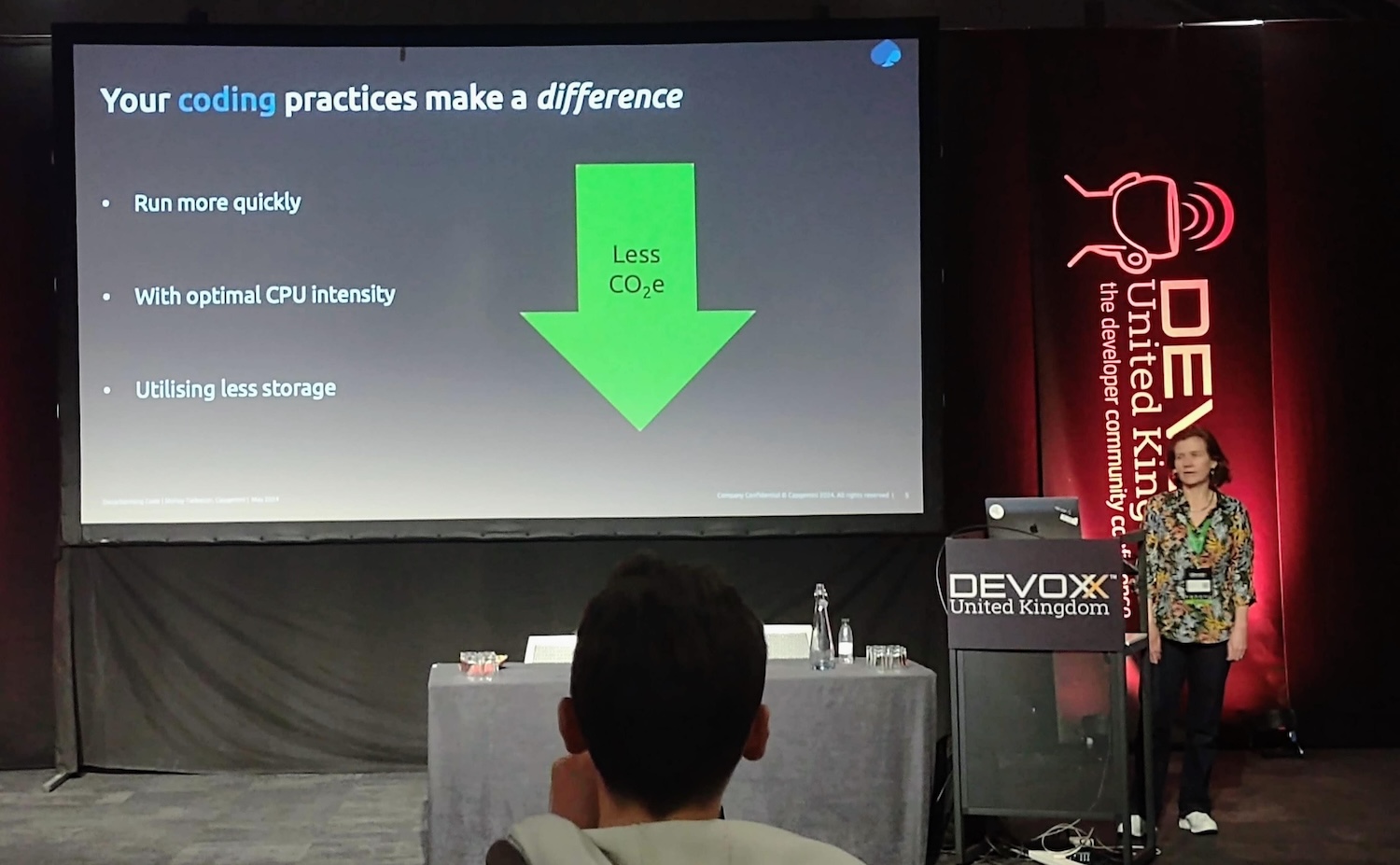

All this can be expressed through the syntax of the language. A bit of a pain to learn, but well worth it. A lot of end-of-life C applications are being migrated to Rust, and after this talk I can understand why. He gave another example of rewriting a Node.js app in Rust and using 95% less memory and 75% less CPU! OK, game-changing point taken!

This talk of application efficiency fitted well with our own Shirley Tarboton’s talk on Decarbonising Code. She gave some examples of easy-to-implement coding practices that can make your application consume fewer resources. It was a well-received talk in the huge auditorium theatre - quite a feat to get people to attend during lunch!

Crashing and burning

Last thing Thursday I popped into a talk called “Mayday Mark 2! More Software Lessons From Aviation Disasters”. As speaker Adele Carpenter pointed out, the Venn diagram of software engineers and aviation geeks is “basically a circle”. Previous aviation disaster talks I’ve been to focussed on ways of working we can both benefit from - for example, focussing on psychological safety, failing fast and failing openly. Adele’s talk, however, was a rather harrowing blow-by-blow account of some major air crashes from the past few decades. There were some important lessons about how much information humans can cope with, how humans react unexpectedly in stressful situations, and the importance of familiarity and expectations in UI design, but overall it was a rather sobering affair. Which is just as well, since we all poured straight out of the talk and into the local pub for the famous Devoxx party, complete with IT-branded beers. Cheers for another year!

Continuous Change

On Friday I went to a talk very relevant to my current project - Chris Simon’s “Winning at Continuous Deployment with the Expand/Contract Pattern”. My project has a fairly typical Continuous Integration pipeline, but we still do production releases via manual intervention on a business schedule. One of the reasons is schema changes which cause backward incompatibility between services. This can cause outages if our server-side (message recipient) services are updated before the client-side (message sender) services, because even if the updated server manages to process the old-style message, it may send a response the not-yet-updated client isn’t expecting. So whilst we have eventual consistency, a few requests may get lost along the way during the service restart. The Expand/Contract pattern addresses this by putting the onus on developers to create an “interim” server-side application which can accept both old and new client messages, and sends both old and new response formats. So if you used to have an application that took a username and returned OK, with a request and response like this:

{ "name": "Sarah Saunders" }

{ "response": "OK" }

and the new version would take first name / last name and return an ID:

{ "name": { "first": "Sarah", "last": "Saunders" } }

{ "id": "2134-ker-438052u" }

In this scenario, the “interim” application would accept both inputs, and would return an output like this:

{ "response": "OK", "id": "2134-ker-438052u" }

This should allow client applications to talk to the server both before and after they are migrated to the new version. Then, once all clients are migrated, the “Contract” stage of the release occurs and the server can be moved to only accept/respond in a V2 style.
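A minimal sketch of what the interim (“Expand”) handler might look like, assuming the request and response shapes from the JSON examples in this section. The function names are hypothetical, the ID is a random UUID standing in for whatever the real service generates, and splitting the old-style name on the first space is a simplification for the example.

```python
import json
import uuid


def parse_name(payload):
    """Accept either the old flat name or the new first/last object."""
    name = payload["name"]
    if isinstance(name, str):
        # Old format: {"name": "Sarah Saunders"}
        # Assumption for this sketch: the first space separates the two parts.
        first, _, last = name.partition(" ")
        return first, last
    # New format: {"name": {"first": "Sarah", "last": "Saunders"}}
    return name["first"], name["last"]


def handle_request(body: str) -> str:
    """Interim handler: reads both request styles, answers in both styles."""
    first, last = parse_name(json.loads(body))
    # Storing the user is omitted; a random UUID stands in for the real ID.
    user_id = str(uuid.uuid4())
    # Old clients read "response", new clients read "id".
    return json.dumps({"response": "OK", "id": user_id})


old_reply = json.loads(handle_request('{"name": "Sarah Saunders"}'))
new_reply = json.loads(
    handle_request('{"name": {"first": "Sarah", "last": "Saunders"}}')
)
```

In the “Contract” release, the `isinstance` branch and the `"response"` field would be deleted, leaving only the V2 behaviour.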

I really like this pattern and I don’t think developers would mind writing the extra code for the interim release. It does spell out, though, that deployment must be a consideration right from the design stage of an application. Chris raised this point at the start, mentioning that his other talk goes into more detail about how Test Driven Development (TDD) helps by defining strong contractual interfaces between components, and is a strong enabler for continuous deployment. I have long been a strong advocate of TDD, and here is yet another reason why getting ChatGPT to write your tests for you is no substitute at all for proper TDD!

In conclusion, this was a really great conference for affirming that we (the Capgemini Cloud Development team) are on the right lines with the software and architectures we are currently building for our clients, that our use and understanding of AI aligns well with the global community (or is sometimes a little bit ahead), and that we have lots of great lessons to learn to improve our architecture and deployment practices even further. Looking forward to next year.